Deep learning has made waves over the last few years, yet the technology behind it isn’t entirely understood by the people who build it. In fact, it has never been fully explained why neural networks work, or how they manage to be so accurate.

The main question that baffles AI experts is this: what is it about deep learning that enables generalisation? How, after being fed thousands of photos of dogs, is a neural network able to accurately identify the dogs in new pictures by breed and age, for example? And, if this technology is so accurate, does it mean that our brains apprehend reality in the same way?

A recent article in Quanta Magazine might shed some light on the subject. Neural networks are loosely modelled on assumptions about how the biological brain works: they emulate layers of neurons. When the neurons in one layer fire, they send signals to the connected neurons in the layer above, so each layer processes its input signals and produces output signals for the next.

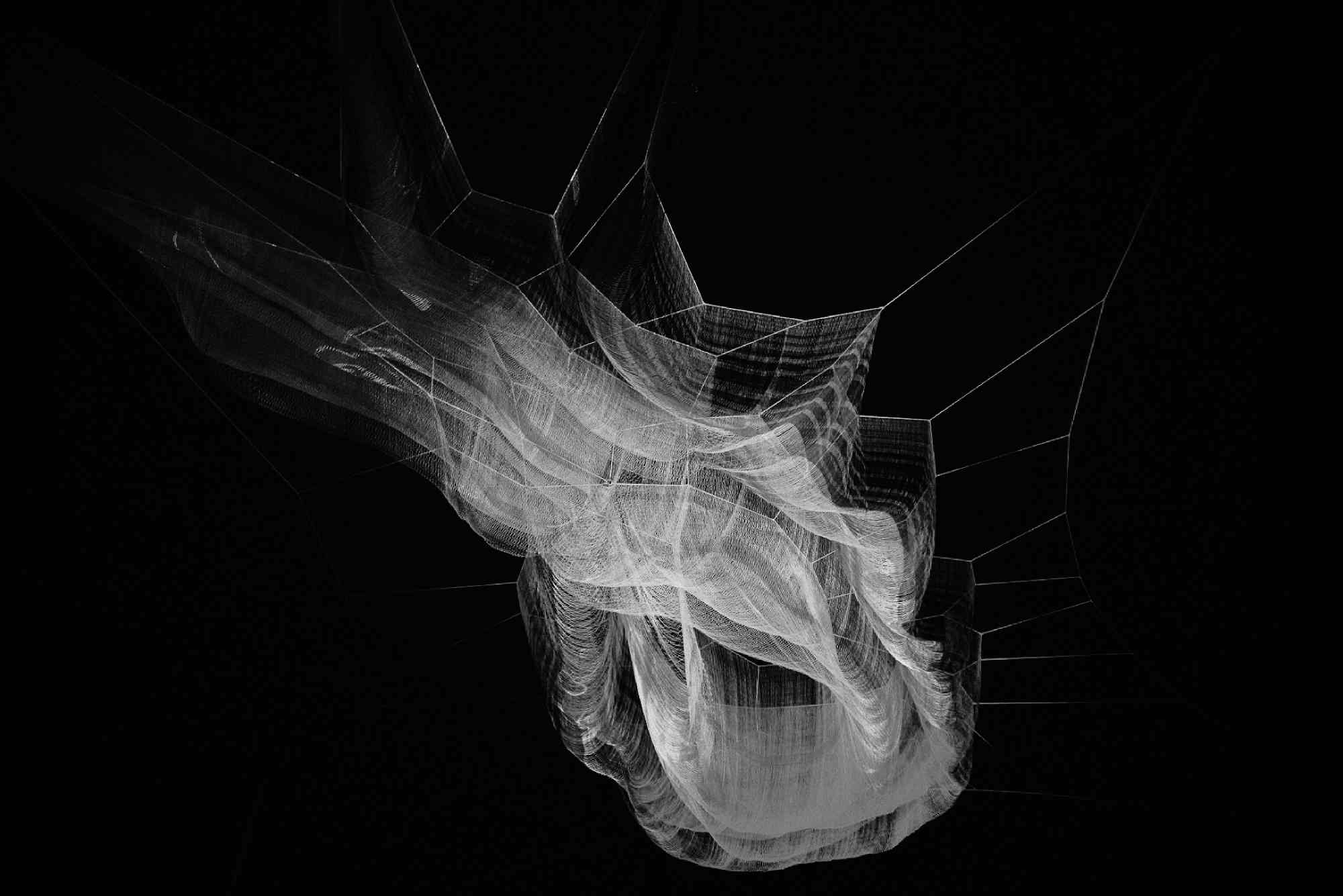
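The layer-by-layer signal flow described above can be sketched in a few lines of Python. This is only an illustrative toy, not code from the Quanta article: the layer sizes, the random weights, the ReLU "firing" rule and the names `relu` and `forward` are all assumptions made for the example.

```python
import numpy as np

def relu(x):
    # A neuron "fires" only when its summed input is positive.
    return np.maximum(0.0, x)

def forward(x, layers):
    """Pass an input vector through a list of (weights, bias) layers."""
    activation = x
    for weights, bias in layers:
        # Each layer combines the signals from the layer below,
        # then passes its own output up to the next layer.
        activation = relu(weights @ activation + bias)
    return activation

# Hypothetical network: 3 inputs -> 4 hidden neurons -> 2 outputs.
rng = np.random.default_rng(0)
layers = [
    (rng.normal(size=(4, 3)), np.zeros(4)),
    (rng.normal(size=(2, 4)), np.zeros(2)),
]

output = forward(np.array([0.5, -1.0, 2.0]), layers)
print(output.shape)  # (2,)
```

In a real network the weights are not random; they are adjusted during training so that the final layer's output matches the labels.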

According to researchers from the Hebrew University of Jerusalem, new evidence suggests that neural networks learn according to a process called the “information bottleneck”. Each layer compresses the information it receives as much as it can, discarding unnecessary detail while preserving whatever is needed to predict the labels accurately. In practical terms, this means that the layers of a neural network are able to forget or ignore some information. For example, when we feed in the thousands of dog photos, some of them might also have a garden or a house in the background, or other elements that are irrelevant to the task of recognising a dog in a picture. The neural network learns to disregard these and focus only on details specific to the dog.
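To make the idea of "forgetting" irrelevant detail concrete, here is a small sketch that measures information in bits for a made-up example. It is not the researchers' method, which estimates these quantities inside real networks; the four-state "photo" distribution and the names `dog`, `background` and `mutual_information` are hypothetical. Each input consists of one dog feature and one background feature, the label depends only on the dog feature, and the compressed representation simply drops the background.

```python
import numpy as np
from itertools import product

def mutual_information(joint):
    """Mutual information I(A;B) in bits from a joint probability table p(a, b)."""
    joint = np.asarray(joint, dtype=float)
    p_a = joint.sum(axis=1, keepdims=True)   # marginal over rows
    p_b = joint.sum(axis=0, keepdims=True)   # marginal over columns
    mask = joint > 0                          # skip zero cells (0 * log 0 = 0)
    return float((joint[mask] * np.log2(joint[mask] / (p_a @ p_b)[mask])).sum())

# Toy inputs: (dog feature, background), all four combinations equally likely.
states = list(product([0, 1], repeat=2))
joint_xy = np.zeros((4, 2))  # input vs. label
joint_xt = np.zeros((4, 2))  # input vs. compressed representation
joint_ty = np.zeros((2, 2))  # compressed representation vs. label
for i, (dog, background) in enumerate(states):
    label = dog   # assumption: the label depends only on the dog feature
    t = dog       # assumption: the representation discards the background
    joint_xy[i, label] += 0.25
    joint_xt[i, t] += 0.25
    joint_ty[t, label] += 0.25

input_bits = mutual_information(np.eye(4) * 0.25)  # information in the raw input
kept_bits = mutual_information(joint_xt)           # what the representation keeps
label_bits = mutual_information(joint_ty)          # what it still says about the label

print(f"{input_bits:.1f} {kept_bits:.1f} {label_bits:.1f}")  # 2.0 1.0 1.0
```

The representation keeps only one of the input's two bits, yet it still carries the full one bit of information that the input holds about the label. That trade-off, compressing the input while staying predictive, is what the information bottleneck describes.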

Some of the peer reviews of the paper suggest that the researchers behind it might be on to something, particularly when it comes to opening the black box of neural networks. However, they stress that the human brain, with its 86 billion neurons, is a much bigger black box, and that even if the way infants learn somewhat resembles how deep learning works, machines are unable to capture the full picture of what is happening. For example, children don’t need to see thousands of examples of handwritten letters to be able to recognise and write them; sometimes they just need to see something once to learn from it.

This is inspiring for AI researchers as it shows we’re really in the early stages of machine learning and that there’s plenty of room to grow. One of the objectives now is to shorten the path to generalisation and be able to train models on smaller data sets, producing better results – just as human brains would.